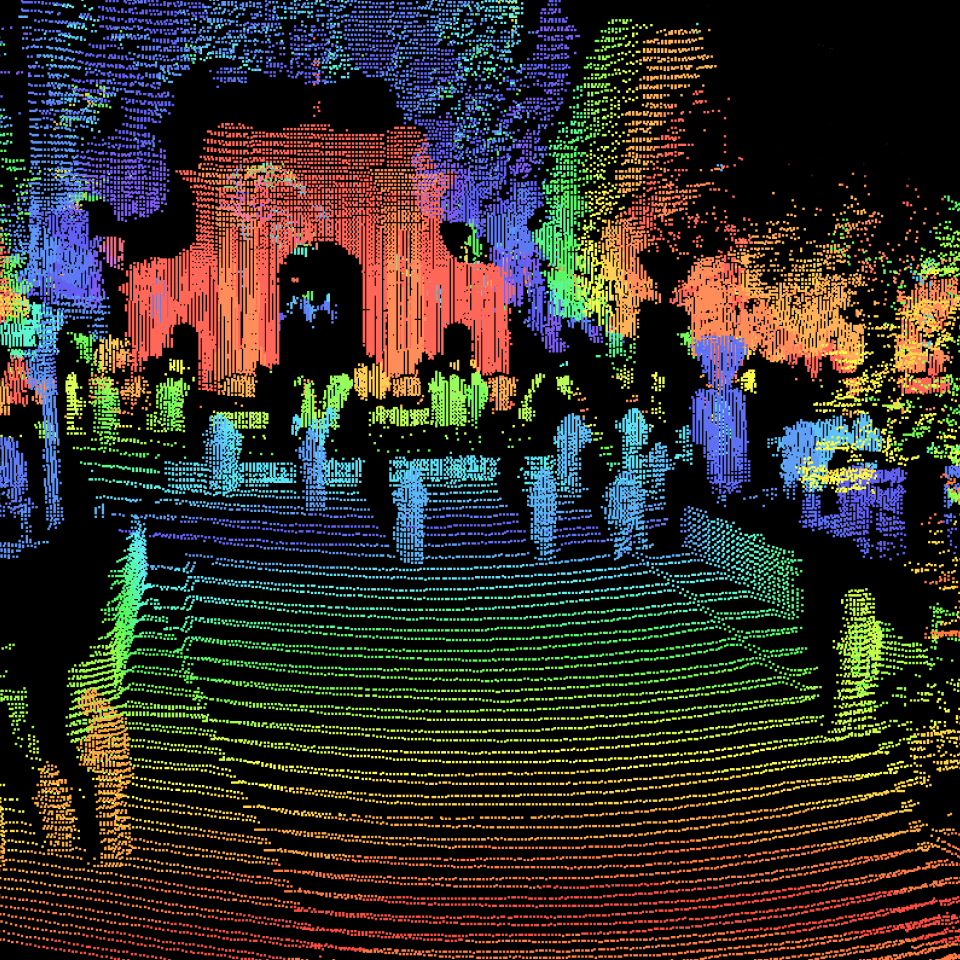

Complex Driving Scenarios in Urban Environments

For PandaSet, we carefully planned routes and selected scenes to showcase complex urban driving scenarios, including steep hills, construction, dense traffic, and pedestrians, across a range of lighting conditions in the morning, afternoon, dusk, and evening.

PandaSet scenes are selected from two routes in Silicon Valley: (1) San Francisco; and (2) El Camino Real from Palo Alto to San Mateo.